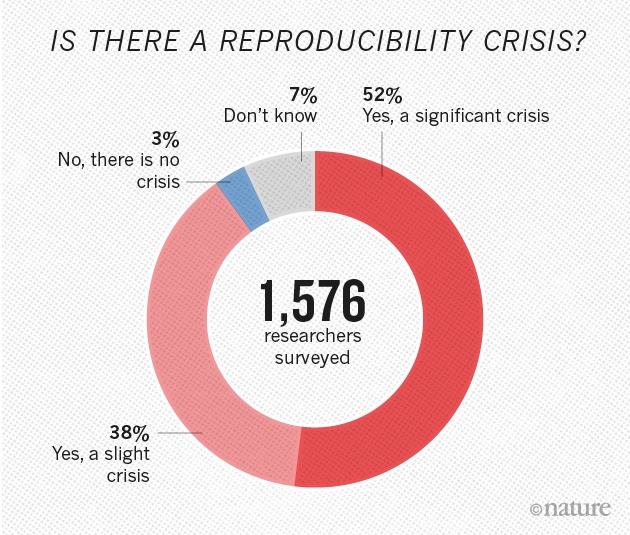

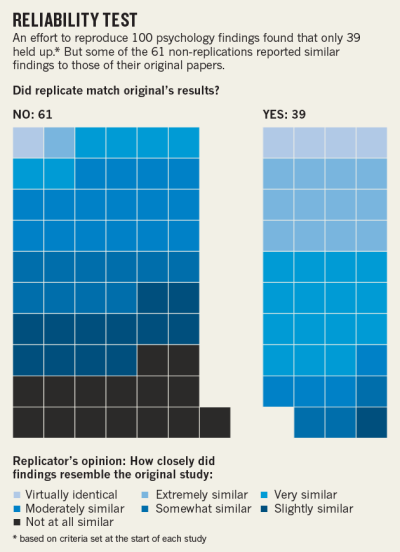

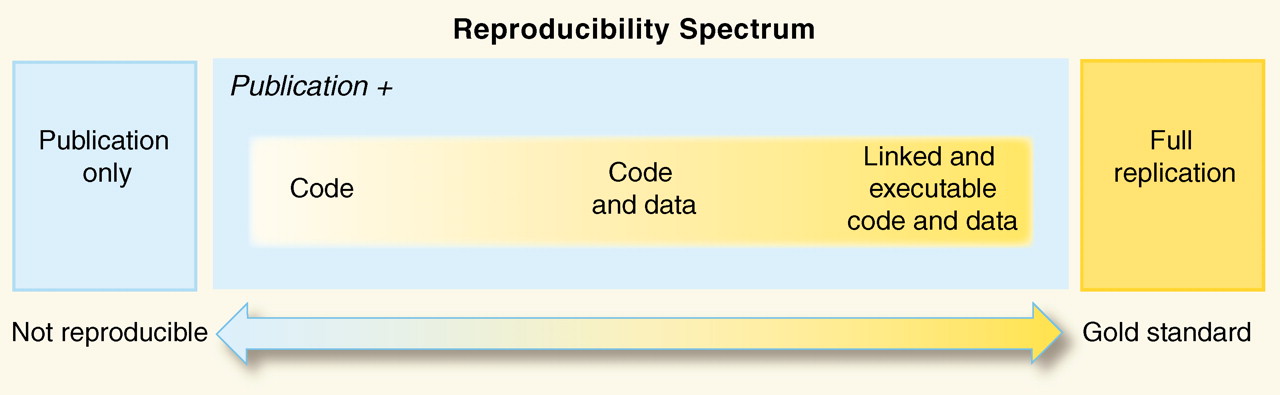

name: title class: Left, middle background-image: url(images/rawpixel/nasa-jupiter.jpg) background-size: cover # .whiteinline[.fancy[Reproducibility: historical notes & concept]] ### .whiteinline[[1.1] Reproducibility & replicability] .whiteinline[Reproducible Research Practices (RRP'21) · April 2021] .right[.whiteinline[Carlos Granell · Sergi Trilles]] .right[.whiteinline[Universitat Jaume I]] --- name: spacecraft class: bottom, middle background-image: url(images/rawpixel/nasa-cygnus-spacecraft.jpg) background-size: cover --- template: spacecraft ## Focus is the art of knowing what to ignore. ### The fastest way to raise your level of performance: Cut your number of commitments in half. --- class: inverse, center, middle # Reproducibility in Science --- class: center # Reproducibility in Science ## Doubt is inherently human and we (scientists) always have doubts! -- ### (Well, that doesn’t mean it’s OK to keep questioning climate change, evolution, and the power of vaccines...) --- class: center # Reproducibility in Science ## Advancing science and knowledge requires -- ### openness -- ### transparency -- ### reproduction -- ### cumulative evidence (replication) --- class: center, middle # Is reproducibility a new problem? -- ### Let's go back to the 17th century... --- class: center # Boyle vs Huygens .pull-left[ <img src="https://upload.wikimedia.org/wikipedia/commons/thumb/a/a3/The_Shannon_Portrait_of_the_Hon_Robert_Boyle.jpg/800px-The_Shannon_Portrait_of_the_Hon_Robert_Boyle.jpg" width="65%"/> [Robert Boyle](https://en.wikipedia.org/wiki/Robert_Boyle) ] .pull-right[ <img src="https://upload.wikimedia.org/wikipedia/commons/a/a4/Christiaan_Huygens-painting.jpeg" width="65%"/> [Christiaan Huygens](https://en.wikipedia.org/wiki/Christiaan_Huygens) ] --- class: center, middle # Boyle's [air-pump](https://en.wikipedia.org/wiki/Air_pump) was one of the first documented disputes over reproducibility... 
--- class: center # Boyle vs Huygens .pull-left[ ### Huygens reported a new effect he termed "anomalous suspension" in Amsterdam. ### Boyle could not replicate this effect in his own air pump in England. ### Huygens was invited to England (1663) to personally help Boyle __replicate__ the anomalous suspension of water. ] .pull-right[ <img src="https://upload.wikimedia.org/wikipedia/commons/3/31/Boyle_air_pump.jpg" width="55%"/> ] ??? [Source](https://en.wikipedia.org/wiki/Reproducibility) --- class: center # Newton vs Flamsteed .pull-left[ <img src="https://upload.wikimedia.org/wikipedia/commons/thumb/3/3b/Portrait_of_Sir_Isaac_Newton%2C_1689.jpg/1280px-Portrait_of_Sir_Isaac_Newton%2C_1689.jpg" width="65%"/> [Isaac Newton](https://en.wikipedia.org/wiki/Isaac_Newton) ] .pull-right[ <img src="https://upload.wikimedia.org/wikipedia/commons/1/16/John_Flamsteed_1702.jpg" width="65%"/> [John Flamsteed](https://en.wikipedia.org/wiki/John_Flamsteed) ] --- class: center, middle # Flamsteed's lunar data & Newton's request for raw data -- In 1695, Sir Isaac Newton wrote a letter to the British Astronomer Royal John Flamsteed, whose data on lunar positions he had been trying to get for more than half a year. Newton declared that -- > “these and all your communications will be useless to me unless you can propose some practicable way or other of supplying me with observations … __I want not your calculations, but your observations only__.” <a name=cite-noy2019></a>[[NN19](http://dx.doi.org/10.1038/s41563-019-0539-5)] ??? [Kollerstrom, N. & Yallop, B. D. J. Hist. Astron. 26, 237–246 (1995)](https://doi.org/10.1177%2F002182869502600303). --- class: center, middle # Is reproducibility a new problem? -- ### Let's go back 40 years, to the birth of personal computers... --- class: center # Literate programming .pull-left[ ### [Literate programming](https://en.wikipedia.org/wiki/Literate_programming): a new methodology of programming. 
<a name=cite-knuth1984></a>[[Knu84](https://doi.org/10.1093/comjnl/27.2.97)] ### "Programs are meant to be read by humans, and only incidentally for computers to execute." ### A program combines human language and code. ] .pull-right[ <img src="https://upload.wikimedia.org/wikipedia/commons/4/4f/KnuthAtOpenContentAlliance.jpg" width="65%"/> [Donald E Knuth](https://en.wikipedia.org/wiki/Donald_Knuth) ] --- class: center, middle # Is reproducibility a new problem? -- ### Let's go back 30 years, to the birth of the Internet... --- class: center # Electronic documents .pull-left[ ## Electronic documents give reproducible research a new meaning <a name=cite-claerbout1992></a>[[CK92](https://doi.org/10.1190/1.1822162)] ### Executable digital notebook ### Be open & help others ### Document for future self ] .pull-right[ <img src="images/Claerbout92.png" width="65%"/> ] --- class: center, middle # Is reproducibility a new problem? -- ### No, it is not _NEW_, but it _IS_ still a problem. 
--- class: left, bottom background-image: url(images/supertramp.jpg) background-size: contain --- class: center # 1,500 scientists lift the lid on reproducibility <a name=cite-baker2016></a>[[Bak16](http://dx.doi.org/10.1038/533452a)] .pull-left[ ### More than 70% of researchers have tried and failed to reproduce another scientist's experiments ### More than 50% have failed to reproduce their own experiments ] .pull-right[  ] --- class: center # Limitations & enablers [[Bak16](http://dx.doi.org/10.1038/533452a)] .pull-left[ <img src="images/reproducibility-graphic-online4.jpg" width="65%"/> ] .pull-right[ <img src="images/reproducibility-graphic-online5.jpg" width="70%"/> ] --- # .center[The reproducibility crisis is well covered] - In __scientific studies__ in general, across various disciplines <a name=cite-ioannidis2005></a>[[Ioa05](https://doi.org/10.1371/journal.pmed.0020124)]: _Why most published research findings are false_ - In __economics__ <a name=cite-ioannidis2017></a>[[ISD17](https://doi.org/10.1111/ecoj.12461)]: _The Power of Bias in Economics Research_ - In __medical chemistry__ <a name=cite-baker2017></a>[[Bak17](https://doi.org/10.1038/548485a)]: _Check your chemistry_ - In __neuroscience__ <a name=cite-button2013></a>[[But+13](https://doi.org/10.1038/nrn3475)]: _Power failure: why small sample size undermines the reliability of neuroscience_ - In __psychology__ <a name=cite-baker2015></a>[[Bak15](https://doi.org/10.1038/nature.2015.17433)]: _First results from psychology's largest reproducibility test_ --- # .center[Psychology's largest reproducibility test] .pull-left[ ### Only 39 out of 100 published psychology studies could be reproduced. 
[[Bak15](https://doi.org/10.1038/nature.2015.17433)] ] .pull-right[  ] --- class: center, middle # The “science is in crisis” narrative is wrong ### <a name=cite-fanelli2018></a>[[Fan18](https://doi.org/10.1073/pnas.1708272114)]: _Opinion: Is science really facing a reproducibility crisis, and do we need it to?_ > The new “science is in crisis” narrative is not only empirically unsupported, but also quite obviously counterproductive. Instead of inspiring younger generations to do more and better science, it might foster in them cynicism and indifference. Instead of inviting greater respect for and investment in research, it risks discrediting the value of evidence and feeding antiscientific agendas. > Therefore, contemporary science could be more accurately portrayed as facing “new opportunities and challenges” or even a “revolution”. __Efforts to promote transparency and reproducibility would find complete justification in such a narrative of transformation and empowerment__, a narrative that is not only more compelling and inspiring than that of a crisis, but also better supported by evidence. --- class: inverse, center, middle ## The concept of reproduction --- class: center, middle # Objectives of scientific research -- ### Discover laws, axioms, rules, etc., and describe the conditions under which they apply -- ### Conduct case studies to prove a general principle or theory --- class: center, middle # Objectives of scientific research ### Transfer/publish results to establish the validity, veracity, and trustworthiness of findings -- > One of the pathways by which scientists confirm the validity of a new finding or discovery is by repeating the research that produced it. 
> Observed inconsistency may be an important precursor to new discovery while others fear it may be a symptom of a lack of rigor in science --- class: center # 'Show me', not 'trust me' <a name=cite-stark2018></a>[[Sta18](https://doi.org/10.1038/d41586-018-05256-0)] .pull-left[ ### 'Show me' = ‘help me if you can’ ### not 'trust me' = not ‘catch me if you can’ > "If I publish a paper long on results but short on methods, and it’s wrong, that makes me untrustworthy." > "If I say: ‘here’s my work’ and it’s wrong, I might have erred, but at least I am honest". ] .pull-right[  ] --- class: center, middle # Today's reality -- ### Computation has a large and increasing role in scientific research <a name=cite-stodden2014></a>[[SM14](https://doi.org/10.5334/jors.ay)] -- ### Many and diverse computational sciences (bio-informatics, geophysics, material science, fluid mechanics, climate modelling, computational chemistry, ...) <a name=cite-barba2021></a>[[Bar21](https://ieeexplore.ieee.org/document/9364769/)] --- class: center, middle # Today's reality ### If results are produced by complex computational processes, -- ### the _methods_ section of a scientific paper is no longer sufficient. --- # .center[The inverse problem <a name=cite-nust2021></a>[[NE21](https://f1000research.com/articles/10-253/v1)]]  --- class: center, middle ### We define _reproducibility_ to mean -- .cold[COMPUTATIONAL REPRODUCIBILITY] --- # .center[Some terms <a name=cite-donoho2009></a><a name=cite-peng2011></a><a name=cite-leek2017></a><a name=cite-barba2018></a>[[CK92](https://doi.org/10.1190/1.1822162); [Don+09](https://doi.org/10.1109/MCSE.2009.15); [Pen11](https://doi.org/10.1126/science.1213847); [LJ17](https://doi.org/10.1146/annurev-statistics-060116-054104); [Bar18](https://arxiv.org/abs/1802.03311)]] .large[__Reproducible research__: Authors provide all the necessary data and the computer codes to run the analysis again, re-creating the results.] 
.large[__Reproducibility__: A study is reproducible if all of the code and data used to generate the numbers and figures in the paper are available and exactly produce the published results.] --- # .center[Some terms [[CK92](https://doi.org/10.1190/1.1822162); [Don+09](https://doi.org/10.1109/MCSE.2009.15); [Pen11](https://doi.org/10.1126/science.1213847); [LJ17](https://doi.org/10.1146/annurev-statistics-060116-054104); [Bar18](https://arxiv.org/abs/1802.03311)]] .large[__Replication__: A study that arrives at the same scientific findings as another study, collecting new data (possibly with different methods) and completing new analyses.] .large[__Replicability__: A study is replicable if an identical experiment can be performed like the first study and the statistical results are consistent.] .large[__False discovery__: A study is a false discovery if the result presented in the study produces the wrong answer to the question of interest.] --- class: center, middle # Our view <a name=cite-ostermann2017></a><a name=cite-nust2018></a><a name=cite-ostermann2020></a>[[OG17](https://doi.org/10.1111/tgis.12195); [Nus+18](https://doi.org/10.7717/peerj.5072); [Ost+20](http://dx.doi.org/10.31223/X5ZK5V)]: .large[A reproducible paper ensures a reviewer or reader can recreate the computational workflow of a study or experiment, including the prerequisite knowledge and the computational environment. The former implies the scientific argument to be understandable and sound. The latter requires a detailed description of used software and data, and both being openly available.] --- class: center # Reproducibility spectrum [[Pen11](https://doi.org/10.1126/science.1213847)]  --- # Summary ### Reproducibility involves the .coldinline[ORIGINAL] data and code: 1. checking the published manuscript, 1. looking for published data and code, then 1. comparing the results of that data and code to the published results. 1. 
if they are the same, the study is reproducible; otherwise, it is not. ### Replicability involves .fatinline[NEW] data collection to test for consistency with previous results of a similar study. - The purpose of replication is to advance theory by confronting existing understanding with new evidence.<a name=cite-nosek2020></a>[[NE20](http://dx.doi.org/10.1371/journal.pbio.3000691)] - Replication is accumulated evidence - Even when a paper is computationally reproducible, it may fail to be replicated --- # References <a name=bib-baker2015></a>[Baker, M](#cite-baker2015) (2015). _First results from psychology's largest reproducibility test_. URL: [https://doi.org/10.1038/nature.2015.17433](https://doi.org/10.1038/nature.2015.17433). <a name=bib-baker2017></a>[\-\-\-](#cite-baker2017) (2017). "Check your chemistry". In: _Nature_ 548.7668, pp. 485-488. URL: [https://doi.org/10.1038/548485a](https://doi.org/10.1038/548485a). <a name=bib-baker2016></a>[Baker, Monya](#cite-baker2016) (2016). "1,500 scientists lift the lid on reproducibility". In: _Nature_ 533.7604, pp. 452–454. DOI: [10.1038/533452a](https://doi.org/10.1038%2F533452a). URL: [http://dx.doi.org/10.1038/533452a](http://dx.doi.org/10.1038/533452a). <a name=bib-barba2018></a>[Barba, L](#cite-barba2018) (2018). _Terminologies for Reproducible Research_. URL: [https://arxiv.org/abs/1802.03311](https://arxiv.org/abs/1802.03311). <a name=bib-barba2021></a>[Barba, Lorena A](#cite-barba2021) (2021). "Trustworthy Computational Evidence Through Transparency and Reproducibility". In: _Computing in Science & Engineering_ 23.1, pp. 58-64. DOI: [10.1109/MCSE.2020.3048406](https://doi.org/10.1109%2FMCSE.2020.3048406). URL: [https://ieeexplore.ieee.org/document/9364769/](https://ieeexplore.ieee.org/document/9364769/). --- # References <a name=bib-button2013></a>[Button, KS, JPA Ioannidis, et al.](#cite-button2013) (2013). 
"Power failure: why small sample size undermines the reliability of neuroscience". In: _Nature Reviews Neuroscience_ 14.5, pp. 365-376. URL: [https://doi.org/10.1038/nrn3475](https://doi.org/10.1038/nrn3475). <a name=bib-claerbout1992></a>[Claerbout, JF and M Karrenbach](#cite-claerbout1992) (1992). "Electronic documents give reproducible research a new meaning". In: _SEG Technical Program Expanded Abstracts 1992_. Society of Exploration Geophysicists, pp. 601-604. URL: [https://doi.org/10.1190/1.1822162](https://doi.org/10.1190/1.1822162). <a name=bib-donoho2009></a>[Donoho, DL, A Maleki, et al.](#cite-donoho2009) (2009). "Reproducible research in computational harmonic analysis". In: _Computing in Science & Engineering_ 11.1, pp. 8-18. URL: [https://doi.org/10.1109/MCSE.2009.15](https://doi.org/10.1109/MCSE.2009.15). <a name=bib-fanelli2018></a>[Fanelli, D](#cite-fanelli2018) (2018). "Opinion: Is science really facing a reproducibility crisis, and do we need it to?" In: _Proceedings of the National Academy of Sciences_ 115.11, pp. 2628-2631. URL: [https://doi.org/10.1073/pnas.1708272114](https://doi.org/10.1073/pnas.1708272114). <a name=bib-ioannidis2005></a>[Ioannidis, JP](#cite-ioannidis2005) (2005). "Why most published research findings are false". In: _PLOS Medicine_ 2.8, p. e124. URL: [https://doi.org/10.1371/journal.pmed.0020124](https://doi.org/10.1371/journal.pmed.0020124). --- # References <a name=bib-ioannidis2017></a>[Ioannidis, JP, TD Stanley, et al.](#cite-ioannidis2017) (2017). "The Power of Bias in Economics Research". In: _The Economic Journal_ 127.605, pp. F236-F265. URL: [https://doi.org/10.1111/ecoj.12461](https://doi.org/10.1111/ecoj.12461). <a name=bib-knuth1984></a>[Knuth, DE](#cite-knuth1984) (1984). "Literate Programming". In: _The Computer Journal_ 27.2, pp. 97-111. URL: [https://doi.org/10.1093/comjnl/27.2.97](https://doi.org/10.1093/comjnl/27.2.97). <a name=bib-leek2017></a>[Leek, JT and LR Jager](#cite-leek2017) (2017). 
"Is Most Published Research Really False?" In: _Annual Review of Statistics and Its Application_ 4, pp. 109-122. URL: [https://doi.org/10.1146/annurev-statistics-060116-054104](https://doi.org/10.1146/annurev-statistics-060116-054104). <a name=bib-nosek2020></a>[Nosek, Brian A. and Timothy M. Errington](#cite-nosek2020) (2020). "What is replication?". In: _PLOS Biology_ 18.3, p. e3000691. ISSN: 1545-7885. DOI: [10.1371/journal.pbio.3000691](https://doi.org/10.1371%2Fjournal.pbio.3000691). URL: [http://dx.doi.org/10.1371/journal.pbio.3000691](http://dx.doi.org/10.1371/journal.pbio.3000691). <a name=bib-noy2019></a>[Noy, Natasha and Aleksandr Noy](#cite-noy2019) (2019). "Let go of your data". In: _Nature Materials_ 19.1, p. 128. DOI: [10.1038/s41563-019-0539-5](https://doi.org/10.1038%2Fs41563-019-0539-5). URL: [http://dx.doi.org/10.1038/s41563-019-0539-5](http://dx.doi.org/10.1038/s41563-019-0539-5). --- # References <a name=bib-nust2021></a>[Nüst, Daniel and Stephen J Eglen](#cite-nust2021) (2021). "CODECHECK: an Open Science initiative for the independent execution of computations underlying research articles during peer review to improve reproducibility". In: _F1000Research_ 10, p. 253. ISSN: 2046-1402. DOI: [10.12688/f1000research.51738.1](https://doi.org/10.12688%2Ff1000research.51738.1). URL: [https://f1000research.com/articles/10-253/v1](https://f1000research.com/articles/10-253/v1). <a name=bib-nust2018></a>[Nüst, D, C Granell, et al.](#cite-nust2018) (2018). "Reproducible research and GIScience: an evaluation using AGILE conference papers". In: _PeerJ_ 6, p. e5072. URL: [https://doi.org/10.7717/peerj.5072](https://doi.org/10.7717/peerj.5072). <a name=bib-ostermann2017></a>[Ostermann, FO and C Granell](#cite-ostermann2017) (2017). "Advancing science with VGI: Reproducibility and replicability of recent studies using VGI". In: _Transactions in GIS_ 21.2, pp. 
224-237. URL: [https://doi.org/10.1111/tgis.12195](https://doi.org/10.1111/tgis.12195). <a name=bib-ostermann2020></a>[Ostermann, Frank, Daniel Nüst, et al.](#cite-ostermann2020) (2020). _Reproducible Research and GIScience: an evaluation using GIScience conference papers_. DOI: [10.31223/x5zk5v](https://doi.org/10.31223%2Fx5zk5v). URL: [http://dx.doi.org/10.31223/X5ZK5V](http://dx.doi.org/10.31223/X5ZK5V). <a name=bib-peng2011></a>[Peng, RD](#cite-peng2011) (2011). "Reproducible Research in Computational Science". In: _Science_ 334.6060, pp. 1226-1227. URL: [https://doi.org/10.1126/science.1213847](https://doi.org/10.1126/science.1213847). --- # References <a name=bib-stark2018></a>[Stark, PB](#cite-stark2018) (2018). "Before reproducibility must come preproducibility". In: _Nature_ 557.7706, pp. 613-614. URL: [https://doi.org/10.1038/d41586-018-05256-0](https://doi.org/10.1038/d41586-018-05256-0). <a name=bib-stodden2014></a>[Stodden, V and SB Miguez](#cite-stodden2014) (2014). "Best Practices for Computational Science: Software Infrastructure and Environments for Reproducible and Extensible Research". In: _Journal of Open Research Software_ 2.1, p. e21. URL: [https://doi.org/10.5334/jors.ay](https://doi.org/10.5334/jors.ay).