Reproducibility assessment

If a paper really interests you, you will want to understand its inner workings well, and attempting to reproduce it is a good way to do so. Before attempting a real reproduction, however, it is advisable to assess the paper's level of pre-reproducibility (Stark 2018). That is, do you, as a reader or reviewer of the paper, have full access to all the resources needed to perform the reproduction?

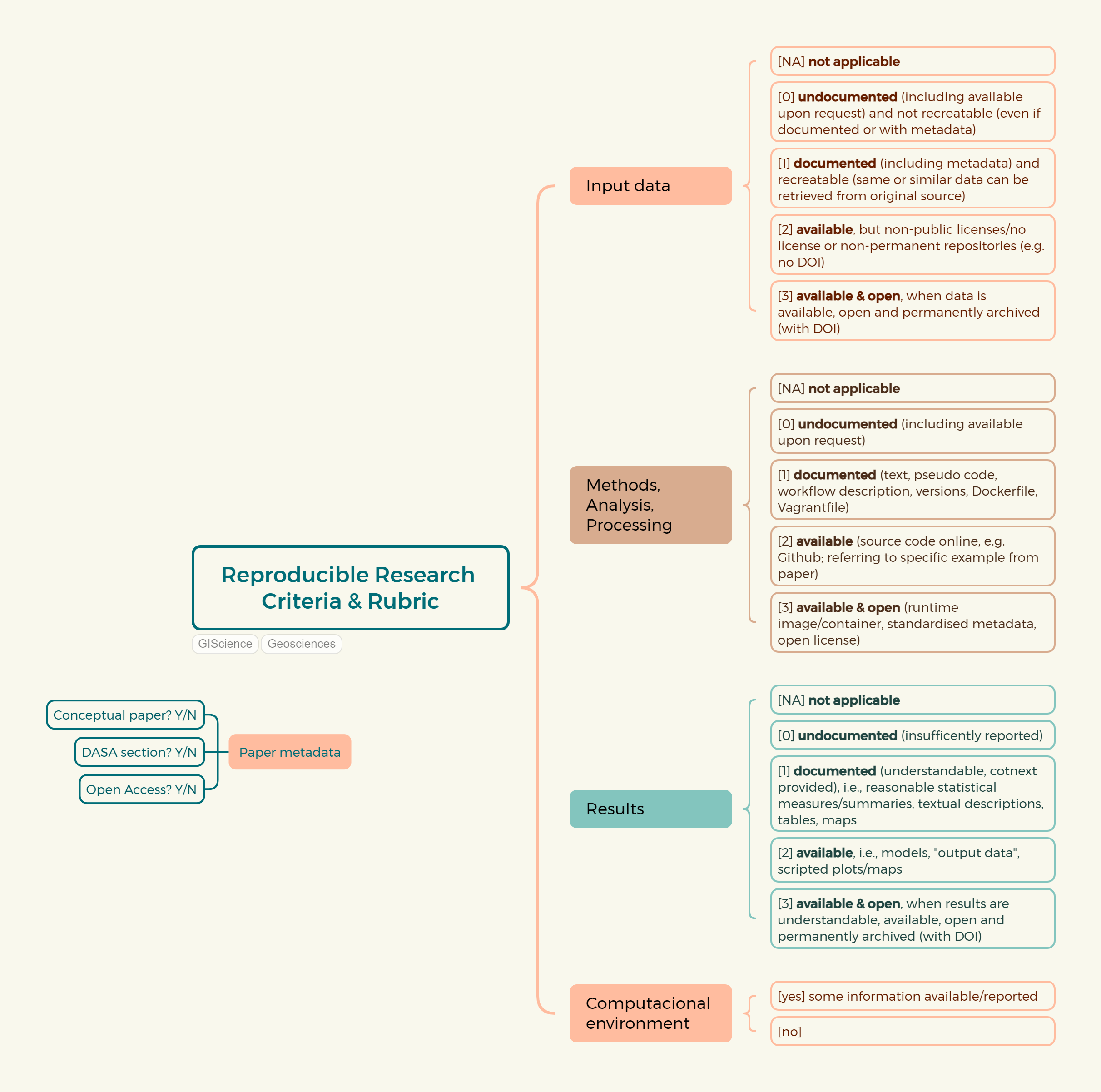

In the context of the Reproducible Research @ AGILE initiative, we proposed a set of criteria for evaluating the degree of reproducibility of an article (Nüst et al. 2018). In that work, we evaluated a selection of papers published in the AGILE conference series. Using the same criteria and methodological approach, we then evaluated conference articles published in the GIScience conference series (Ostermann et al. 2021).

Criteria

Drawing on the experience gained in these studies, we revised the reproducibility criteria for an ongoing longitudinal study (Nüst, Granell, and Ostermann 2023). The revised criteria remain compatible with the original ones and are shown in Figure 1. They also align well with the welcome illustration of The Turing Way project (see Figure 2).

Assessment example

As an example, let’s investigate this paper (or choose your own if you have a good candidate): Enhanced Multi Criteria Decision Analysis for Planning Power Transmission Lines

What do you think their scores should be?

| Criterion | NA | Undocumented [0] | Documented [1] | Available [2] | Available & open [3] |
|---|---|---|---|---|---|
| Input data | | | | | |
| Methods, Analysis, Processing | | | | | |
| Results | | | | | |

| Criterion | Yes | No |
|---|---|---|
| Computational environment | | |

Reproduction plan

Choose one of your recently published papers or a current draft and carry out a self-assessment exercise: assign a level (Not Applicable [NA], Undocumented [0], Documented [1], Available [2], or Available and Open [3]) to each reproducibility criterion except the computational environment.
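If you prefer to keep your self-assessment in a machine-readable form, the following minimal Python sketch shows one possible way to record it. The names `Level`, `CRITERIA`, `validate`, and `format_assessment` are hypothetical and not part of the cited studies, and the example values are placeholders rather than an actual assessment of any paper. Not Applicable is represented as `None` here, which is only one of several reasonable choices.

```python
from enum import IntEnum
from typing import Dict, Optional


class Level(IntEnum):
    """Numeric levels named in the text; NA is modelled as None (an assumption)."""
    UNDOCUMENTED = 0
    DOCUMENTED = 1
    AVAILABLE = 2
    AVAILABLE_AND_OPEN = 3


# Criteria that receive a level; the computational environment is excluded,
# as in the exercise.
CRITERIA = ("Input data", "Methods, Analysis, Processing", "Results")


def validate(assessment: Dict[str, Optional[Level]]) -> None:
    """Raise ValueError if a criterion is missing or an unknown one is present."""
    missing = [c for c in CRITERIA if c not in assessment]
    unknown = [c for c in assessment if c not in CRITERIA]
    if missing or unknown:
        raise ValueError(f"missing={missing}, unknown={unknown}")


def format_assessment(assessment: Dict[str, Optional[Level]]) -> str:
    """Return one line per criterion, e.g. 'Input data: Available [2]'."""
    validate(assessment)
    lines = []
    for criterion in CRITERIA:
        level = assessment[criterion]
        if level is None:
            label = "NA"
        else:
            name = level.name.replace("_", " ").capitalize()
            label = f"{name} [{int(level)}]"
        lines.append(f"{criterion}: {label}")
    return "\n".join(lines)


# Placeholder values for illustration only, not a real assessment.
example = {
    "Input data": Level.AVAILABLE,
    "Methods, Analysis, Processing": Level.DOCUMENTED,
    "Results": None,
}
print(format_assessment(example))
```

Keeping the levels in an enumeration makes it harder to enter a value outside the 0 to 3 range, and the validation step flags a forgotten criterion before you move on to the reproduction plan.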

Using the reproduction plan template (doc, pdf), fill in only the first two columns, because the content of the planned measures column depends on the Unit 3 Practices.